Auditing in SSIS can be a real headache. In this two-part blog series I am going to try to make it a little easier. You can also download my white paper and sample files on auditing SSIS here. You can also see this post and more at SQL University. Part 1 of the blog is here.

Creating a Custom Auditing Framework

Creating a custom auditing solution is time-consuming, but once it is built it gives you exactly the detail you want in your own table and lets you filter out unnecessary data. You will create this custom auditing by adding tasks to the Event Handlers of the package.

Event Handlers

There are several event handlers listed under the Event Handlers tab. Click on the Event Handlers tab at the top of the example package and you will see two drop-down menus at the top. The left drop-down lists the tasks in the package and the package itself, as seen in figure 8. You can create specific auditing for each task if desired. In this example you will create auditing for the entire package, so ensure the package name is selected.

Figure 8

The right drop-down menu contains the events available for the package. Select the OnError event handler and click the blue link in the center to create the OnError event handler. Before you can start auditing you will need to create a table to write the data to. For this example you will be auditing the package name, task name, error description, and the date. Open SSMS and run the following SQL in your auditing database to create the auditing table.

    CREATE TABLE [SSISErrorLog](
        [RunTime] [datetime] NULL,
        [Package] [varchar](500) NULL,
        [Task] [varchar](500) NULL,
        [Error] [varchar](500) NULL
    ) ON [PRIMARY]

Execute SQL Task

Now you are ready to insert data into this table. Before you insert data you need to create one more variable, because the date format of the SSIS system variables differs from the DateTime format in SQL. The format in SQL is 1900-01-01 00:00:00.000, and the format in SSIS is 1/1/1900 12:00:00 AM, so you will need to convert the SSIS date to the SQL format. To do this, create a variable on the package named strStartTime and set the type to String. In the properties of the variable, set it to evaluate as an expression. Click on the expression ellipsis and enter the following code:

    (DT_WSTR, 10)(DT_DBDATE)@[System::ContainerStartTime] + " " + (DT_WSTR, 8)(DT_DBTIME)@[System::ContainerStartTime]

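This is the SSIS expression language. The expression converts the start time of the current container to a string in a format SQL will recognize: the date cast produces the yyyy-mm-dd part and the time cast produces the hh:mm:ss part.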
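As a quick sanity check, you can confirm in SSMS that a string in this shape converts cleanly to a datetime. The snippet below is only a sketch: the timestamp is a made-up sample value, and the inline DECLARE assumes SQL Server 2008 or later. It also passes style 120 to CONVERT, which is an addition not used elsewhere in this post; it asks SQL Server to read the string as yyyy-mm-dd hh:mi:ss (24-hour) instead of relying on the session's language and date format settings.

```sql
-- Hypothetical sample value in the shape strStartTime produces.
DECLARE @strStartTime varchar(19) = '2011-03-15 14:30:00';

-- Style 120 = ODBC canonical (yyyy-mm-dd hh:mi:ss, 24-hour clock).
SELECT CONVERT(datetime, @strStartTime, 120) AS RunTime;
```

If your servers all run with the default US English settings, the conversion should also work without the style argument, but being explicit may save some debugging if a session uses a different date format.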

Go back to the package OnError event handler. Drag in an Execute SQL Task. Open this task and set the connection to the database that contains the SSISErrorLog table. Enter the following SQL into the Execute SQL Task. Notice the CONVERT function, which converts the string value of the date to a datetime for SQL. Each question mark is a parameter placeholder that you will map in the next step.

    INSERT INTO SSISErrorLog
        ([RunTime]
        ,[Package]
        ,[Task]
        ,[Error])
    VALUES(CONVERT(datetime,(?)),?,?,?)
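Before wiring up the parameters, it can help to try the same statement by hand in SSMS. The sketch below replaces the four ? placeholders with T-SQL variables; every value is invented for testing, and the inline DECLARE assumes SQL Server 2008 or later. The work is wrapped in a transaction that is rolled back, so no test row is left in the log.

```sql
-- Stand-ins for the four ? parameters; all values are made up.
DECLARE @RunTime varchar(19)  = '2011-03-15 14:30:00';
DECLARE @Package varchar(500) = 'SamplePackage';
DECLARE @Task    varchar(500) = 'Sample Execute SQL Task';
DECLARE @Error   varchar(500) = 'Sample error description';

BEGIN TRANSACTION;

-- Same shape as the statement used in the Execute SQL Task.
INSERT INTO SSISErrorLog ([RunTime], [Package], [Task], [Error])
VALUES (CONVERT(datetime, @RunTime), @Package, @Task, @Error);

-- Inspect the row that was just written.
SELECT [RunTime], [Package], [Task], [Error]
FROM SSISErrorLog
WHERE [Package] = @Package;

-- Undo the test insert so the log stays clean.
ROLLBACK TRANSACTION;
```

If this runs without error, any failure you see later in the event handler is more likely to come from the parameter mapping than from the SQL itself.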

Click on the Parameters tab of the Execute SQL Task and enter the parameters as shown in figure 9 below. Notice the first parameter is the variable you created previously; the rest are system variables. Click OK to close the task and return to the control flow of the package.

Figure 9

You will need to cause an error in the package to have the Event Handler fire. Open the first Execute SQL Task in the For Each Loop and put the letter ‘X’ in front of the SQL command. This will cause a syntax error. Run the package. The package should fail. Open SSMS and query the SSISErrorLog table and you should see the data from the package run as seen in figure 10 below.

Figure 10

If you do not see any data, return to the package and look under the Progress/Execution Results tab and find the error on the event handler. It should tell you why the insert statement failed.
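A simple query along these lines should be enough to check the results in SSMS. It is a sketch that assumes the table was created exactly as shown earlier; the row limit of 20 is an arbitrary choice.

```sql
-- Show the most recent errors first.
SELECT TOP (20) [RunTime], [Package], [Task], [Error]
FROM SSISErrorLog
ORDER BY [RunTime] DESC;
```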

Expressions

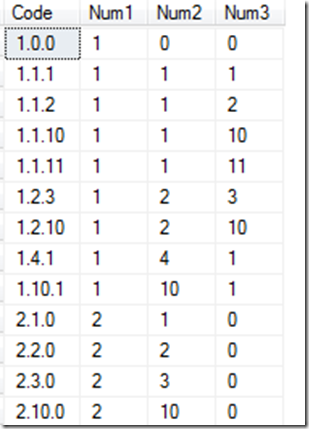

This was a simple example of writing data to a table to audit a package. You can use more variables and expressions to make the auditing more customized. For example, you can create some of the variables below and use the corresponding expressions. These variables would be the parameters in the Execute SQL Task instead of the system variables. Of course, you would need to alter your table to add columns for these new values.

| Variable Name | Variable Type | Expression |
|---|---|---|
| strUser | String | @[System::MachineName] + "\\" + @[System::UserName] |
| strDate | String | (DT_WSTR, 10)(DT_DBDATE)@[System::ContainerStartTime] |
| strPackageLoc | String | @[System::MachineName] + "\\" + @[System::PackageName] |
| strExecBy | String | @[System::InteractiveMode] == true ? @[System::UserName] : @[System::MachineName] |
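To store these extra values, the audit table needs matching columns. The statement below is one possible way to add them; the column names and sizes are hypothetical choices made for this sketch, not part of the original sample files, so rename or resize them to suit your environment.

```sql
-- Hypothetical columns, one per variable in the table above.
ALTER TABLE SSISErrorLog ADD
    [ExecUser]   varchar(500) NULL,  -- strUser
    [RunDate]    varchar(10)  NULL,  -- strDate (yyyy-mm-dd string)
    [PackageLoc] varchar(500) NULL,  -- strPackageLoc
    [ExecBy]     varchar(500) NULL;  -- strExecBy
```

The INSERT statement in the Execute SQL Task would then need one extra column and one extra ? placeholder for each value you decide to log, with the parameters mapped in the same order.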

You can see by this small example that creating and maintaining a robust auditing solution will take quite a bit of time. This type of solution would need to be added to every package in your environment that you need to audit. You can use a package as a template and make any adjustments to the auditing as needed during package development.

To avoid this time-consuming work, you can use BI xPress, a tool by Pragmatic Works that can do this work for you.